LXD: Prefer IPv6 addresses for outgoing connections

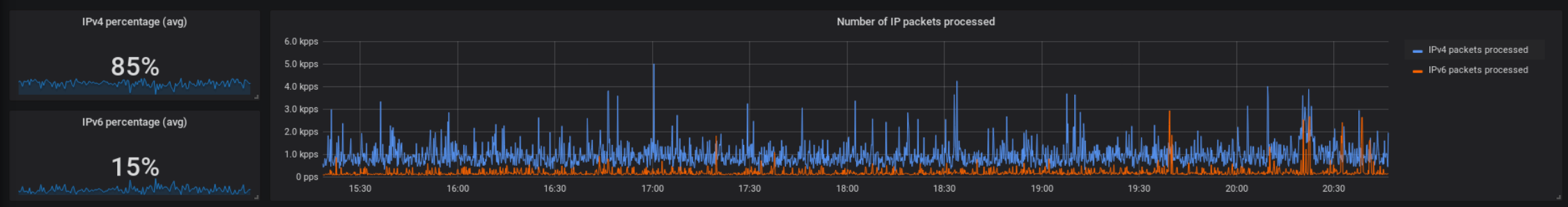

If you follow my Mastodon account, you might know that I like IPv6 very much. I’m trying to do my best to offer all my services via the new IP protocol. Lately I was investigating the use of IPv6 in my server network. Unfortunately the result was quite disappointing:

Just 15 % IPv6 traffic? Strange. Google’s IPv6 statistics show much better numbers. How could that be? While debugging the problem, I put the focus on connections to my server. No problem there: All the services offer AAAA DNS records and are available via their corresponding IPv6 addresses.

Then it came to me: What if outgoing IPv6 did not work properly?

Some of my services create lots of server-to-server network connections, which means that a mistake in my “outgoing connections” configuration might be the reason why fewer IPv6 connections are established than expected.

First I connected to one of my KVM machines and ran a simple ping test. `ping thomas-leister.de` showed that an IPv6 connection was initiated (and IPv6 was preferred over IPv4). Everything was fine. Next I connected to one of my LXD containers, which ran Mastodon. I tried the same, but instead of an IPv6 address, an IPv4 address showed up in the terminal. At the same time, ping tests to IPv6-only targets such as ipv6.google.com were successful.
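The same check can also be scripted. The following is a minimal sketch, not what was run at the time: it assumes Python is available in the container and relies on `socket.getaddrinfo`, which hands the lookup to the C library and returns the addresses in the order the system would try them. The helper names `first_family` and `preferred_family` are made up for this example.

```python
import socket

def first_family(addrinfo):
    """Return 'IPv6' or 'IPv4' for the first entry of a getaddrinfo() result."""
    family = addrinfo[0][0]
    return "IPv6" if family == socket.AF_INET6 else "IPv4"

def preferred_family(host, port=443):
    """Resolve host and report the family and address that are listed first."""
    results = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the address string for both IPv4 and IPv6.
    return first_family(results), results[0][4][0]
```

Calling `preferred_family("thomas-leister.de")` on the KVM machine and inside a container should show the same difference as the ping test.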

I was running “dual stack” correctly, but for some mysterious reason IPv4 connections were preferred over IPv6 in all LXD containers - except for one. My XMPP server container preferred IPv6 as you would expect. How was it different from all the other containers?

It was the interface IP address, or more precisely the IPv6 range it was in. While my XMPP container had a public IPv6 address starting with 2a01, all the other containers had fd42 addresses from the private fc00::/7 address space. That is because by default LXD creates a private network in which all the containers reside. Their services are accessible via web or TCP proxies. The XMPP container, in contrast, was directly attached to the host’s public interface and therefore had public IP addresses.
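To tell the two kinds of addresses apart programmatically, a small sketch using Python's standard `ipaddress` module is enough. The function name `is_ula` and the sample addresses in the usage note are made up for illustration; only the fc00::/7 range comes from the text above.

```python
import ipaddress

# Unique Local Addresses: the private IPv6 range LXD's default network uses.
ULA = ipaddress.ip_network("fc00::/7")

def is_ula(addr):
    """True if addr is an IPv6 address inside fc00::/7."""
    ip = ipaddress.ip_address(addr)
    return ip.version == 6 and ip in ULA
```

With this, `is_ula("fd42::1")` is true for a container address on the private network, while `is_ula("2a01::1")` is false for an address from a public range.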

While doing some research on how Linux decides which IP addresses to use for outgoing connections, I stumbled upon /etc/gai.conf and the following lines in it:

    # This default differs from the tables given in RFC 3484 by handling
    # (now obsolete) site-local IPv6 addresses and Unique Local Addresses.
    # The reason for this difference is that these addresses are never
    # NATed while IPv4 site-local addresses most probably are.  Given
    # the precedence of IPv6 over IPv4 (see below) on machines having only
    # site-local IPv4 and IPv6 addresses a lookup for a global address would
    # see the IPv6 be preferred.  The result is a long delay because the
    # site-local IPv6 addresses cannot be used while the IPv4 address is
    # (at least for the foreseeable future) NATed.  We also treat Teredo
    # tunnels special.

Suddenly it was clear to me why IPv6 connectivity was not preferred in most containers! It was because I was using private IPv6 addresses for outgoing connections. The configuration in gai.conf (gai = “get address information”, the file that controls how glibc's getaddrinfo() sorts its results) was telling Linux to prefer IPv4 over IPv6 when the IPv6 source address comes from the private space.

The maintainers of gai.conf have good reasons for it, but for my use case I needed to change that.

This is the default configuration as it looked in my Debian-based LXD containers. As long as all the label lines are commented out, the built-in default settings are active (as shown).

    #label ::1/128       0
    #label ::/0          1
    #label 2002::/16     2
    #label ::/96         3
    #label ::ffff:0:0/96 4
    #label fec0::/10     5
    #label fc00::/7      6
    #label 2001:0::/32   7
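To check quickly whether a container still runs on the built-in table, the file can be scanned for uncommented label lines. This is a minimal sketch in Python; the function name `active_labels` is made up, and the parser only understands the simple `label <prefix> <number>` form shown above.

```python
def active_labels(conf_text):
    """Return (prefix, label) pairs from uncommented 'label' lines."""
    entries = []
    for line in conf_text.splitlines():
        # Drop everything after '#', so commented-out lines become empty.
        line = line.split("#", 1)[0].strip()
        parts = line.split()
        if len(parts) == 3 and parts[0] == "label":
            entries.append((parts[1], int(parts[2])))
    return entries
```

Feeding it the contents of `/etc/gai.conf` and getting an empty list back means that no label line is active and the built-in defaults apply.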

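You can see that the fc00::/7 IPv6 range gets treated separately. A short look at the RFC 3484 table reveals that this special treatment is not part of the original default, but was added on top of it, probably by the glibc or Debian developers. I restored the original table by uncommenting the first five lines. As soon as any label line is active, the built-in table is no longer used, so the three remaining rules simply disappear: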

    label ::1/128       0
    label ::/0          1
    label 2002::/16     2
    label ::/96         3
    label ::ffff:0:0/96 4
    #label fec0::/10     5
    #label fc00::/7      6
    #label 2001:0::/32   7

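With this change, the fd42 IP addresses are no longer handled by the label fc00::/7 rule, but by the label ::/0 rule. The private source address now carries the same label as a public IPv6 destination, so the IPv6 route is no longer penalized and takes precedence over IPv4 addresses (handled by the ::ffff:0:0/96 and ::/96 rules) again.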
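The effect of the label table can be reproduced with a few lines of Python. This is a simplified model, not glibc's actual implementation: it only looks up labels by longest prefix match and checks whether source and destination share a label, which is one of several sorting rules from RFC 3484. All addresses used with it are made-up examples (fd42::1 and 10.0.0.5 for a container, 2001:db8::10 and 192.0.2.10 for a remote server), and the function names are hypothetical.

```python
import ipaddress

# Label table as listed (commented out) in the stock gai.conf.
DEFAULT_LABELS = {
    "::1/128": 0,
    "::/0": 1,
    "2002::/16": 2,
    "::/96": 3,
    "::ffff:0:0/96": 4,
    "fec0::/10": 5,
    "fc00::/7": 6,
    "2001:0::/32": 7,
}

# Table after uncommenting only the first five label lines.
MODIFIED_LABELS = {p: l for p, l in DEFAULT_LABELS.items() if l <= 4}

def label_for(addr, table):
    """Look up the label of addr by longest prefix match."""
    ip = ipaddress.ip_address(addr)
    if ip.version == 4:
        # IPv4 addresses are matched in their IPv4-mapped IPv6 form.
        ip = ipaddress.IPv6Address("::ffff:" + addr)
    matches = [
        (ipaddress.ip_network(prefix).prefixlen, label)
        for prefix, label in table.items()
        if ip in ipaddress.ip_network(prefix)
    ]
    return max(matches)[1]  # entry with the longest matching prefix

def labels_match(src, dst, table):
    """True if source and destination address carry the same label."""
    return label_for(src, table) == label_for(dst, table)

if __name__ == "__main__":
    for name, table in (("default", DEFAULT_LABELS), ("modified", MODIFIED_LABELS)):
        v6 = labels_match("fd42::1", "2001:db8::10", table)
        v4 = labels_match("10.0.0.5", "192.0.2.10", table)
        print(f"{name}: IPv6 labels match={v6}, IPv4 labels match={v4}")
```

Under the default table only the IPv4 pair has matching labels, which is why IPv4 wins. Under the modified table both pairs match, and the usual preference for IPv6 decides.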

After my changes to the configuration file, ping thomas-leister.de showed the corresponding IPv6 address. The problem was solved!

Did it increase IPv6 usage in my networks? Not really. Maybe a little bit. But my statistics did not change perceptibly. That might be because it’s not only landline ISPs being lazy at enabling IPv6 routing …

By the way: “Why even use the private IPv6 address space?” you might ask, and “why not bind a public IPv6 address directly to the container and drop the HTTP proxy / TCP proxy / NAT?”.

Well, it has to do with IPv4. IPv4 addresses are expensive, therefore I own just a small /28 subnet. It’s big enough to give each of my hosts one public IP address, but not every single container. This is why I use Nginx in front of (most of) my application containers. It terminates TLS connections and then forwards requests to the private network, where all the containers live.

Now if I bound IPv6 addresses directly to the containers, I would end up with some sort of “asymmetric setup”: For IPv4 I would terminate TLS before the container - for IPv6 I would have to terminate TLS inside the application container. It would make maintaining the webserver configuration and TLS certificates twice as complicated. For this reason I decided to go with private networks and a proxy setup in front of them.

It’s not nice - but hopefully in the future, IPv4 addresses will go extinct and I won’t have to deal with this kind of problem anymore ;-)