Creating my own small CDN for my Mastodon instance metalhead.club

Since the big Twitter wave that flooded the Mastodon network and, more broadly, the Fediverse in the fall and winter of 2022, international users have been playing a bigger role for metalhead.club. Until recently, the service was hosted entirely in Germany. However, the growing number of international members brings new challenges, for example the rapid delivery of content.

As long as users are mainly located in Germany and Europe, latency times to the “Full Metal Server” in Frankfurt are low. However, the situation is different for users from Canada, the US, and Australia, for example, of whom there are a significant number on metalhead.club. For these users, using metalhead.club was sometimes a bit of a test of patience, as videos and larger images in particular appeared on the website with a slight delay. I can only simulate the situation in the browser, but even a ping of more than 200 ms spoils the fun of scrolling through the timeline in some places.

Recently, a user in the US brought a specific problem to my attention: he could only stream a particular iFixit video with constant interruptions. This prompted me to finally consider a CDN, a content delivery network.

The purpose of such a network is to bring the content available to a user of a platform or website closer to the user, so to speak. This is not just meant metaphorically, but is actually a physical matter: even if we assume that internet nodes, signal repeaters, and other equipment do not introduce additional latency into the system on the route from here to the US, it is well known that light in undersea cables is not infinitely fast. This fact alone means that web content must actually be offered (physically) closer to the customer. A CDN therefore consists of many data center locations or servers that are installed close to users – ideally in metropolitan areas, where you can also benefit from a well-developed network infrastructure. Depending on the use case, this can be in certain regions of the world or even across the globe. The content is mirrored on all (or some) of the servers, depending on requirements. When requesting content, a US user is not redirected to an EU server, but automatically retrieves the content from a server in their vicinity (a US data center). This process usually remains transparent to the user. There are two established methods for determining the location from which content is accessed (and which server is responsible):

- Anycast-based CDNs

- “GeoIP”-based CDNs
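To put a rough number on the physical limit mentioned above, here is a minimal sketch that estimates the best-case round-trip time over fiber. The coordinates, the great-circle routing, and the figure of roughly 200,000 km/s for light in glass fiber (about two thirds of the vacuum speed) are assumptions for illustration. Real cable routes are longer and add equipment delay, so actual pings will be higher.

```python
import math

# Assumed approximate coordinates (latitude, longitude) in degrees.
FRANKFURT = (50.11, 8.68)
ASHBURN = (39.04, -77.49)
SINGAPORE = (1.35, 103.82)

# Assumption: light travels at roughly 2/3 of c inside glass fiber.
FIBER_SPEED_KM_PER_S = 200_000.0
EARTH_RADIUS_KM = 6371.0


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points in kilometres."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def min_rtt_ms(a, b):
    """Lower bound for the round-trip time: twice the one-way fiber delay."""
    return 2 * great_circle_km(a, b) / FIBER_SPEED_KM_PER_S * 1000


for name, site in (("Ashburn", ASHBURN), ("Singapore", SINGAPORE)):
    print(f"Frankfurt <-> {name}: at least {min_rtt_ms(FRANKFURT, site):.0f} ms")
```

Even under these idealized assumptions, a request from Frankfurt to the US east coast cannot complete a round trip in much less than about 65 ms, and a request to Singapore needs roughly 100 ms.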

Anycast-based CDNs

The first CDN type is the “gold standard” and enables very elegant (and accurate) routing: In principle, the same public IP address is assigned to several servers scattered around the world. The routing protocol BGP decides which server is actually addressed when a request is made. This protocol essentially determines the paths that an IP packet must take to reach its destination. In most cases, there are several ways to reach the destination, and the routing system picks the one with the lowest cost. Strictly speaking, BGP does not measure latency; it prefers routes based on operator policy and attributes such as the AS path length, but in practice the preferred route usually leads to a nearby server with low latency. In this respect, the work is handed over to the routing system, which is relied upon to find a good route through the Internet. That is what it was developed for. The route does not always have to be the shortest geographically. If route A to server A is preferred over route B to server B, the IP packets are automatically routed to server A. This server then responds. The other servers that can be reached under the same IP address are not aware of the request. Incidentally, anycast is also behind all globally available DNS resolvers such as Google DNS, Quad9, or Cloudflare DNS. The IP address is always the same, but in the background, you are routed to the nearest server without noticing.

GeoIP-based CDNs

The term GeoIP refers to a large database – a GeoIP database, such as those offered by companies like MaxMind (and used, for example, in my project watch.metalhead.club). The database is fed with publicly available routing information and stores which IP address ranges (and thus ASNs) are assigned to which companies. Once the ownership of an IP address is clear, conclusions can be drawn about the geographical area of use via the assigned company. IPv6 addresses that come from the 2003::/19 network (i.e., 2003:: to 2003:1fff:ffff:ffff:ffff:ffff:ffff:ffff) belong to Deutsche Telekom, for example. This also makes it clear where a user with such an IP address comes from when requesting content: from Germany. GeoIP databases store these relationships so that they can be queried: “Where does a user with the IP address xxx come from?” The database then provides a more or less accurate answer.
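The lookup described above can be sketched with Python's standard `ipaddress` module. This is only a toy model: the table is a hypothetical stand-in for a real GeoIP database. Only the 2003::/19 entry comes from the text; the other prefixes are documentation ranges with made-up country codes.

```python
import ipaddress

# Hypothetical mini "GeoIP database": prefix -> country code.
# Only the 2003::/19 entry is taken from the text; the other two use
# the IPv6 documentation range with invented country codes.
GEO_TABLE = {
    ipaddress.ip_network("2003::/19"): "DE",
    ipaddress.ip_network("2001:db8::/32"): "US",
    ipaddress.ip_network("2001:db8:abcd::/48"): "SG",
}


def lookup_country(address, table=GEO_TABLE, default="unknown"):
    """Return the country of the most specific prefix containing address."""
    ip = ipaddress.ip_address(address)
    matches = [net for net in table if ip.version == net.version and ip in net]
    if not matches:
        return default
    # Longest prefix wins, as in routing tables.
    return table[max(matches, key=lambda net: net.prefixlen)]


telekom = ipaddress.ip_network("2003::/19")
print("Range:", telekom[0], "to", telekom[-1])
print("2003:e8::1 ->", lookup_country("2003:e8::1"))
```

The first line of output reproduces the address range given above. A real database works the same way in principle, just with far more prefixes and finer-grained location data.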

The accuracy of GeoIP-based CDNs

My experience with the free offerings of such databases has shown me that this works quite well at the country level, but it is usually not possible to locate users down to the city level. And perhaps that is just as well. There are better and worse databases. The most accurate databases are usually behind a costly subscription model. This is because GeoIP databases need to be maintained. IP addresses and subnets are traded and sold to other providers, especially in times of IPv4 address shortages. An address range that just belonged to an African mobile phone provider may belong to a small Asian company tomorrow. If the databases are not regularly maintained, the information becomes outdated and worthless.

And this is precisely where the major disadvantage of GeoIP lies. While Anycast-based CDNs rely on routing systems already knowing the best routes, GeoIP relies on additionally collected information. The mapping between IP address and owner is not difficult to determine and is public. The difficulty lies rather in correctly determining the type of use and region of use for an owner. After all, it could be a small business, but it could also be an ISP that only distributes the addresses to its customers. Only the owner or ISP knows how such distribution takes place and how large the geographical area of use is. Additional tracking information can be used to make this assignment more accurate. For example, thanks to smartphones, an online advertising company could combine GPS location and IP address to determine a user’s location more accurately. If this GPS information is then sold to the GeoIP provider, the latter can determine the location of the IP address much more accurately. But that’s another story…

How does a user get forwarded to the right server if Anycast is not used? It’s simple: via the Internet’s directory service – the DNS.

If you want to build a GeoIP-based CDN, you need a DNS server that returns the appropriate IP address for the user’s region. For each request to the DNS service, the IP address is determined and the appropriate target host is identified in the background based on a GeoIP database. I deliberately write “the IP address” because which IP address is examined depends …

Resolver IP or EDNS Client Subnet (ECS)?

One might assume that the address of the requesting user would be examined, for example, the public IP address of the smartphone or laptop, but this is not the case. This is because the name server responsible for my domain, e.g., metalhead.club, almost never sees this client. It is the DNS resolvers that request the correct IP for a service for the client – not the clients themselves. So all the GeoIP name server can evaluate is the IP address of the resolver.

In many cases, this means that the user themselves is not located, only their DNS resolver. For private customers, this is usually the standard DNS resolver of their ISP, for example Deutsche Telekom. The location of the DNS resolver therefore roughly corresponds to the location of the customer, at least at country level. So if my name server sees an IP that can be assigned to a Deutsche Telekom resolver, I can usually assume that the user making the request is also from Germany.

However, there are also special cases: namely, globally available and ISP-independent, open DNS resolvers. For example:

- Cloudflare DNS

- Google DNS

- Quad9

- DNS4EU

These often do not allow conclusions to be drawn about a specific country. In order to still be able to roughly locate the customer actually making the request behind the DNS request, there is an EDNS extension called “ECS” (EDNS Client Subnet) that allows additional information to be sent to my name server: namely, the subnet of the original user. The resolver naturally knows this client IP and forwards it to my name server in anonymized form (only the subnet instead of the exact IP address). The name server can now use the subnet forwarded via ECS instead of the resolver IP for its GeoIP database and obtains a more precise result for the geographical origin of the request.
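The choice between resolver IP and ECS subnet can be sketched as follows. This is a simplified model, not the code of any real name server: the function names are hypothetical, the prefix lengths (/24 for IPv4, /56 for IPv6) are assumptions since each resolver decides how much it truncates, and the addresses are taken from documentation ranges.

```python
import ipaddress


def ecs_subnet(client_ip, v4_prefix=24, v6_prefix=56):
    """Truncate a client address to the subnet a resolver might forward via ECS.

    The prefix lengths are assumptions; resolvers choose their own values.
    """
    ip = ipaddress.ip_address(client_ip)
    prefix = v4_prefix if ip.version == 4 else v6_prefix
    # strict=False zeroes the host bits instead of raising an error.
    return ipaddress.ip_network(f"{ip}/{prefix}", strict=False)


def geo_lookup_key(resolver_ip, ecs=None):
    """Pick the address a GeoIP name server would feed into its database.

    Prefer the ECS subnet when the resolver sent one; otherwise fall back
    to the resolver's own address.
    """
    if ecs is not None:
        return ecs.network_address
    return ipaddress.ip_address(resolver_ip)


client = "198.51.100.23"      # documentation address standing in for a user
resolver = "203.0.113.53"     # documentation address standing in for a resolver

print("With ECS:   ", geo_lookup_key(resolver, ecs_subnet(client)))
print("Without ECS:", geo_lookup_key(resolver))
```

With ECS, the lookup is based on the user's (truncated) network; without it, only the resolver's location is known.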

ECS is not supported by all public resolvers – DNS4EU, for example, does not yet support ECS – even though the feature is on the operator’s to-do list:

DNS4EU currently does not support EDNS, but we want to add support for this feature. Unfortunately, we do not have a timeline on when this should be done. Please check the project page https://www.joindns4.eu/learn for updates.

So what should you use? A CDN provider or self-hosting?

Anycast systems are expensive. Very expensive. And impossible for someone like me to implement. Because to do so, you would need to have your own IP address range for which you can define BGP routes yourself. So the issue was quickly settled for me. Anycast? I don’t operate it myself. But there is another option: After all, you can rent space in existing Anycast systems or simply rent CDNs. Renting Anycast IPs is also costly and is not offered in this form by the hosts I use or want to use.

However, freely rentable CDNs are a dime a dozen: BunnyCDN, Akamai, Cloudflare, KeyCDN, … these are just a few popular examples. These CDNs are often sold in combination with DNS services, S3 storage, or DDoS protection. There are also some offers that are affordable for small platform operators on the internet. I was particularly interested in BunnyCDN. For around €20-25 per month, I could have the approximately 2.5 TB of metalhead.club media played worldwide every month. However, there are two issues that currently concern me:

- I would have to relinquish TLS termination and have the media hosted externally. However, my users rely on me to keep all data in my own hands.

- The green electricity issue: It has become easier to find server hosts that operate exclusively with green electricity. Unfortunately, the situation is not so good with CDN providers: Only Cloudflare seems to use 100% green electricity.

This means that renting space in an existing CDN is also out of the question (for now?).

Building your own Geo-IP-based CDN

So the only option left is to build it yourself!

My expectations are relatively low. I am, of course, aware that a self-built GeoIP-based CDN will quickly reach its limits in terms of efficiency, accuracy, and performance. This is especially true if, like me, you plan to invest very little money in it. My primary goal is to improve the user experience for some of my metalhead.club members. I won’t achieve the perfect solution this way.

Since the supply situation within Europe is fine and latency measurements using online tools (more on that later!) have delivered satisfactory results, I have focused on two locations in particular: the American continent and Asia. With a total of three media servers for media.metalhead.club, this would roughly cover the entire globe.

Using the “Ping Simulation” tool, I looked at what latencies I can expect globally if I distribute the servers as I had imagined, namely across the locations that the server host Hetzner offers in its cloud. I already have a Hetzner account and am familiar with their products. They also meet my requirements (German company, GDPR-compliant, 100% green electricity). And when it comes to locations, Hetzner offers exactly what I want: in addition to European data centers, there are also two in the US and one in Singapore.

The simulation showed that I can reach large parts of the world with around 100 ms or less. Of course, this always depends on the local provider, but the overall picture was fairly consistent and only in a few locations were the packet times significantly higher than 150 ms. So I quickly booked a cloud server in Ashburn (VA, USA) and one in Singapore with Hetzner.

Implementation

As far as vServers are concerned, I opted for the smallest and cheapest models offered by Hetzner. I wanted to start small and try it out first. As it turns out, the CPX11 cloud servers have been more than sufficient so far. They only need to cache and deliver static files – nothing more.

Nginx caching proxy

Only a single Nginx instance runs on each server. It

- receives requests to media.metalhead.club,

- checks whether the requested content is already available locally,

- if not, requests it from the original S3 bucket and caches it,

- returns it to the client.
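The steps above amount to a classic cache-or-fetch pattern. The following toy model illustrates the idea; it is not how Nginx is implemented, and the class, the fake origin function, and the example path are all hypothetical.

```python
class CachingProxy:
    """Toy model of the cache-or-fetch behaviour of a caching proxy."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin   # callable: path -> bytes
        self._cache = {}                  # path -> cached body

    def get(self, path):
        """Return (body, status), where status is 'HIT' or 'MISS'."""
        if path in self._cache:
            return self._cache[path], "HIT"
        body = self._fetch(path)          # slow trip to the origin bucket
        self._cache[path] = body
        return body, "MISS"


# Hypothetical stand-in for the S3 origin; records every request it receives.
origin_requests = []


def fake_origin(path):
    origin_requests.append(path)
    return f"content of {path}".encode()


proxy = CachingProxy(fake_origin)
first = proxy.get("/media/example.png")
second = proxy.get("/media/example.png")
print(first[1], second[1], "origin requests:", len(origin_requests))
```

The first request is a miss and goes to the origin; the second one is answered from the local cache, so the origin sees only one request.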

My configuration for Nginx:

proxy_cache_path /tmp/nginx-cache-metalheadclub-media levels=1:2 keys_zone=s3_cache:10m max_size=30g
                 inactive=48h use_temp_path=off;

server {
    listen 80;
    listen [::]:80;
    listen 443 ssl http2;
    listen [::]:443 ssl http2;

    server_name media.metalhead.club;

    include snippets/tls-common.conf;
    ssl_certificate /etc/acme.sh/media.metalhead.club/fullchain.pem;
    ssl_certificate_key /etc/acme.sh/media.metalhead.club/privkey.pem;

    client_max_body_size 100M;

    # Register backend URLs
    set $minio_backend 'https://metalheadclub-media.s3.650thz.de';

    root /var/www/media.metalhead.club;

    location / {
        access_log off;
        try_files $uri @minio;
    }

    #
    # Own Minio S3 server (new)
    #
    location @minio {
        limit_except GET {
            deny all;
        }

        resolver 9.9.9.9;

        proxy_set_header Host 'metalheadclub-media.s3.650thz.de';
        proxy_set_header Connection '';
        proxy_set_header Authorization '';

        proxy_hide_header Set-Cookie;
        proxy_hide_header 'Access-Control-Allow-Origin';
        proxy_hide_header 'Access-Control-Allow-Methods';
        proxy_hide_header 'Access-Control-Allow-Headers';
        proxy_hide_header x-amz-id-2;
        proxy_hide_header x-amz-request-id;
        proxy_hide_header x-amz-meta-server-side-encryption;
        proxy_hide_header x-amz-server-side-encryption;
        proxy_hide_header x-amz-bucket-region;
        proxy_hide_header x-amzn-requestid;
        proxy_ignore_headers Set-Cookie;

        proxy_pass $minio_backend$uri;

        # Caching to avoid S3 access
        proxy_cache s3_cache;
        proxy_cache_valid 200 304 48h;
        proxy_cache_use_stale error timeout updating http_500 http_502 http_503 http_504;
        proxy_cache_lock on;
        proxy_cache_revalidate on;

        expires 1y;
        add_header Cache-Control public;
        add_header 'Access-Control-Allow-Origin' '*';
        add_header X-Cache-Status $upstream_cache_status;
        add_header X-Content-Type-Options nosniff;
        add_header Content-Security-Policy "default-src 'none'; form-action 'none'";
        add_header X-Served-By "cdn-us.650thz.de";
    }
}
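As a side note on the `levels=1:2` parameter: Nginx names each cache file after the MD5 hash of the cache key and derives the subdirectories from the end of that hash. The sketch below reproduces this naming scheme. The helper name and the example key are assumptions: the key shown follows the default `proxy_cache_key` pattern (`$scheme$proxy_host$request_uri`), and the request path is a made-up placeholder.

```python
import hashlib


def nginx_cache_file(cache_root, cache_key, levels=(1, 2)):
    """Return the on-disk path that a levels=1:2 cache derives from a key.

    The file name is the MD5 hex digest of the key. Each level takes its
    characters from the end of the digest, working backwards.
    """
    digest = hashlib.md5(cache_key.encode()).hexdigest()
    parts, end = [], len(digest)
    for width in levels:
        parts.append(digest[end - width:end])
        end -= width
    return "/".join([cache_root.rstrip("/"), *parts, digest])


# Assumed example key: scheme + backend host + a placeholder request URI.
example_key = "https" + "metalheadclub-media.s3.650thz.de" + "/example/path.png"
print(nginx_cache_file("/tmp/nginx-cache-metalheadclub-media", example_key))
```

This can be handy when looking for a specific cached object on one of the CDN servers, for example to check whether it has been stored at all.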

By the way: Adding the X-Served-By header makes debugging and checking the function easier. For every image loaded from media.metalhead.club, it is very easy to determine which of the three hosts actually transferred the file. The header can be viewed in the developer tools of any web browser, for example.
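The same check can be scripted instead of using the browser. The following is a minimal sketch using only the Python standard library; the function names are hypothetical and the media path in the usage comment is a placeholder that has to be replaced with a real file URL.

```python
import urllib.request


def fetch_cdn_headers(url, timeout=10):
    """Send a HEAD request and return the response headers as a dict."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return dict(response.headers.items())


def describe_delivery(headers):
    """Summarise which host answered and whether its cache was used."""
    lowered = {name.lower(): value for name, value in headers.items()}
    host = lowered.get("x-served-by", "unknown host")
    cache = lowered.get("x-cache-status", "unknown")
    return f"served by {host} (cache: {cache})"


# Usage (needs network access; replace <path> with a real media path):
# print(describe_delivery(fetch_cdn_headers("https://media.metalhead.club/<path>")))
```

Together with the X-Cache-Status header from the configuration above, this shows not only which host delivered the file, but also whether it came from that host's cache.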

GeoIP-based DNS zones

Now all that’s missing is the GeoIP component so that metalhead.club members are redirected to the appropriate server. At this point, I could have configured my own GeoIP/GeoDNS-enabled name server (as I have already done in a customer project), but I didn’t want to put too much effort into my experiment. So I looked around a bit and found a very interesting offer at Scaleway. There, you can rent name servers that not only support the GeoIP feature but can also be used with external domains (i.e., domains that are not registered with Scaleway). Depending on usage, such a service costs only a few cents to a low single-digit euro amount in my case. I’ll be able to say exactly how much once my CDN has been running for a while ;-)

However, I didn’t want to hand over my 650thz.de root zone to Scaleway. This should continue to be hosted by Core-Networks.de. Instead, I created my own zone “cdn.650thz.de” with the Scaleway name servers as its authoritative name servers. However, I first had to prove ownership of cdn.650thz.de to Scaleway. So I did not set the NS entries right away, but first created a verification entry in the metalhead.club zone:

_scaleway-challenge IN TXT 3600 "verification-string"

After about 30 minutes, it was confirmed that I had control over the domain, and I was able to start entering the Scaleway name servers for cdn.650thz.de in the 650thz.de zone at Core-Networks.de:

cdn 86400 NS ns0.dom.scw.cloud.
cdn 86400 NS ns1.dom.scw.cloud.

(I deleted the _scaleway-challenge entry again)

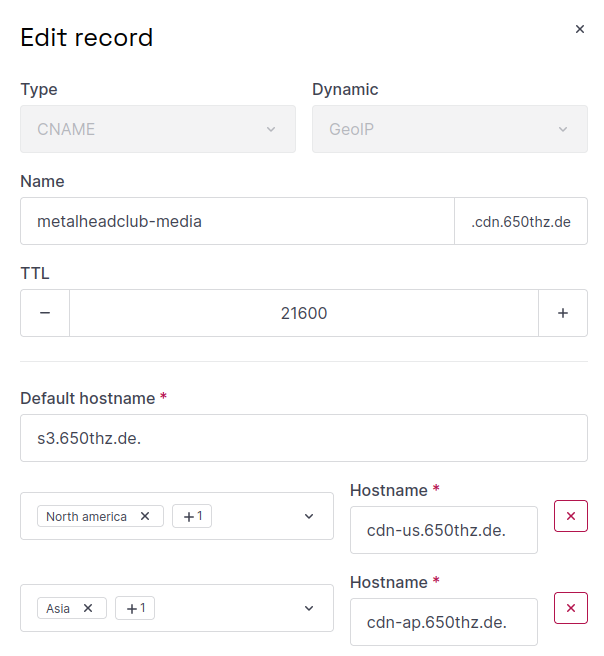

In the cdn.650thz.de zone, I finally added the GeoIP entry for metalheadclub-media.cdn.650thz.de:

The default entry points to s3.650thz.de – the previous S3 media server in Frankfurt. However, if a client location in North or South America is detected, the CDN server cdn-us.650thz.de is used instead. If a location in Asia or the Pacific region is detected, the CDN server cdn-ap.650thz.de is referenced.
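Expressed as code, the rules of this GeoIP entry look roughly like the following sketch. The two-letter continent codes are an assumption about how regions might be identified and not Scaleway's actual data model; the target host names are the ones described above.

```python
# Continent codes are an assumption for illustration; the targets are the
# hosts named in the text.
DEFAULT_TARGET = "s3.650thz.de."
REGION_TARGETS = {
    "NA": "cdn-us.650thz.de.",  # North America
    "SA": "cdn-us.650thz.de.",  # South America
    "AS": "cdn-ap.650thz.de.",  # Asia
    "OC": "cdn-ap.650thz.de.",  # Oceania / Pacific region
}


def target_for(continent_code):
    """Return the media host for a detected region, or the default."""
    return REGION_TARGETS.get(continent_code, DEFAULT_TARGET)


for code in ("EU", "NA", "SA", "AS", "OC", "AF"):
    print(code, "->", target_for(code))
```

Every region without an explicit rule, including Europe and Africa in this sketch, falls back to the Frankfurt server.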

Incidentally, the period at the end of each FQDN in the input form is essential! If no period is set, the name is treated as relative and an entry in the same zone is referenced, which cannot work.
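Why the missing period breaks things becomes clear when you look at how relative names are expanded in a zone. A small sketch, with a hypothetical helper function:

```python
def expand_name(name, zone_origin):
    """Expand a record target the way zone files treat relative names."""
    if name.endswith("."):
        return name                       # already fully qualified
    # Relative name: the zone origin is appended.
    return f"{name}.{zone_origin.rstrip('.')}."


zone = "cdn.650thz.de."
print(expand_name("cdn-us.650thz.de.", zone))   # with trailing period
print(expand_name("cdn-us.650thz.de", zone))    # without trailing period
```

Without the trailing period, the target becomes cdn-us.650thz.de.cdn.650thz.de., a name that does not exist.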

Switching to CDN operation

In order to respond to all requests to media.metalhead.club with the appropriate media server, one more change was necessary: The entry for media.metalhead.club had to refer to metalheadclub-media.cdn.650thz.de in the metalhead.club zone file:

media IN CNAME 3600 metalheadclub-media.cdn.650thz.de.

(Again, pay attention to the trailing period!)

To test the CDN after the DNS change (and once the previously set TTL had expired), I quickly ran an nslookup on media.metalhead.club from each of the servers:

- From s3.650thz.de: Returns own IP

- From cdn-us.650thz.de: Returns own IP

- From cdn-ap.650thz.de: Returns own IP

So the dynamic allocation worked!
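A check like this can also be automated. The sketch below uses the system resolver through `socket.getaddrinfo`, which is similar to, but not the same as, running nslookup. The function names are hypothetical, and the resolver is injectable so the logic can be tried without network access.

```python
import socket


def resolve_all(hostname, resolver=socket.getaddrinfo):
    """Return the set of IP addresses a hostname currently resolves to."""
    # Each result is (family, type, proto, canonname, sockaddr);
    # the address is the first element of sockaddr.
    return {info[4][0] for info in resolver(hostname, None)}


def resolves_to_self(hostname, own_addresses, resolver=socket.getaddrinfo):
    """True if at least one resolved address belongs to this server."""
    return bool(resolve_all(hostname, resolver) & set(own_addresses))


# Usage on one of the servers (needs network access; the address is a
# placeholder for the server's own public IP):
# print(resolves_to_self("media.metalhead.club", {"<own public IP>"}))
```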

How well does it work?

To get a broader picture, I ran further tests using the following tools, for example:

- CDN HTTP Response Time Test: https://tools.keycdn.com/performance

- CDN Test with IPs: https://www.uptrends.com/tools/cdn-performance-check

- BunnyCDN Ping Test: https://tools.bunny.net/latency-test

- CDNPerf Test: https://www.cdnperf.com/tools/cdn-latency-benchmark

I also asked metalhead.club users about this. A user from Australia had previously reported these ping times to media.metalhead.club:

64 bytes from 5.1.72.141: icmp_seq=0 ttl=38 time=369.765 ms

64 bytes from 5.1.72.141: icmp_seq=1 ttl=38 time=350.830 ms

64 bytes from 5.1.72.141: icmp_seq=2 ttl=38 time=469.047 ms

64 bytes from 5.1.72.141: icmp_seq=3 ttl=38 time=379.882 ms

64 bytes from 5.1.72.141: icmp_seq=4 ttl=38 time=400.998 ms

(That’s an average of just under 400 ms!)
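For reference, the average can be computed directly from the ping output with a few lines of Python. This is a small sketch; the regular expression assumes the output format shown above.

```python
import re
import statistics

PING_OUTPUT = """\
64 bytes from 5.1.72.141: icmp_seq=0 ttl=38 time=369.765 ms
64 bytes from 5.1.72.141: icmp_seq=1 ttl=38 time=350.830 ms
64 bytes from 5.1.72.141: icmp_seq=2 ttl=38 time=469.047 ms
64 bytes from 5.1.72.141: icmp_seq=3 ttl=38 time=379.882 ms
64 bytes from 5.1.72.141: icmp_seq=4 ttl=38 time=400.998 ms
"""


def round_trip_times(ping_output):
    """Extract all 'time=... ms' values from ping output as floats."""
    return [float(value) for value in re.findall(r"time=([\d.]+) ms", ping_output)]


times = round_trip_times(PING_OUTPUT)
print(f"{statistics.mean(times):.1f} ms average over {len(times)} samples")
```

For the five samples above, this yields an average of 394.1 ms.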

After the switch, the latencies were significantly lower because he was correctly redirected to the Asia-Pacific server in Singapore instead of the EU server:

64 bytes from 5.223.63.2: icmp_seq=0 ttl=50 time=150.384 ms

64 bytes from 5.223.63.2: icmp_seq=1 ttl=50 time=230.931 ms

64 bytes from 5.223.63.2: icmp_seq=2 ttl=50 time=360.083 ms

64 bytes from 5.223.63.2: icmp_seq=3 ttl=50 time=142.986 ms

64 bytes from 5.223.63.2: icmp_seq=4 ttl=50 time=138.416 ms

64 bytes from 5.223.63.2: icmp_seq=5 ttl=50 time=300.561 ms

(About 221 ms on average over these six samples.)

Not earth-shattering, but still significantly less. This user still has a relatively high latency to the media storage because the distance from Australia to Singapore is not negligible. This is where my small CDN immediately shows its weakness: With only three locations, you can’t achieve optimal performance, but you can at least achieve a small improvement.

For another Australian user, the latency improved from an average of 346 ms to 108 ms!

And for the user from the US I mentioned at the beginning? He was able to play the video in question smoothly and without any problems after the switch.

I used the BunnyCDN test to do a before-and-after comparison:

Before:

After:

Goal achieved!

Latency times have improved significantly for most locations around the US, Australia, Korea, and Japan. Europe remains unchanged, as expected. However, there are also some unexpected outliers that may have fallen victim to incorrect IP localization, for example in Singapore itself (interesting!), Turkey, India, the US state of Texas, and Chile.

Of course, the results also depend heavily on the IP addresses used for testing and the local connection. Turkey may already have been classified as an “Asian region,” and the location from which testing was conducted in Texas may have been identified as European.

I will continue to monitor the results. Since the measurements here were primarily taken from data centers (and not from private homes in the corresponding ISP areas), the results may not reflect the whole truth.

So, one might rightly ask: …

Can you rely on GeoIP?

When testing my CDN with the test websites mentioned above, I discovered that the server contacted didn’t always correctly match the test location (see the result for the Singapore location!), so I surveyed my users. The results from these web tools are probably not particularly accurate. I suspect that the mechanism works much better for end-user address ranges than for data center address ranges. After all, GPS and other location information can be “fused” much more easily for residential IP addresses.

And indeed: In almost all cases, users were assigned the correct media server based on their origin. See: https://metalhead.club/@thomas/114676148254141233 (I probably should have added a poll option to this post…)

The last manual count on June 15, 2025, showed:

- For 83 users (most of them from Germany), the allocation worked.

- For 4 users, it didn’t work. 3 of them were redirected to the USA instead of Frankfurt - one user was even connected to Singapore, even though they were in Germany.
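Put into numbers, the count above gives the following hit rate. The variable names in this short calculation are just for illustration.

```python
# Figures from the manual count on June 15, 2025.
correct = 83
wrong_to_us = 3
wrong_to_singapore = 1

total = correct + wrong_to_us + wrong_to_singapore
hit_rate = correct / total

print(f"{correct} of {total} users correctly assigned: {hit_rate:.1%}")
```

That is a hit rate of roughly 95%, keeping in mind that the sample is small and dominated by users from Germany.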

I asked Scaleway Support which GeoIP database was used and how often it was updated. I received the following response:

There can occasionally be discrepancies, such as the one you experienced with the Singapore data center being identified as European. It can take time for GeoIP databases to reflect changes like IP reallocation. Our GeoIP database provider involve frequent updates, often on a weekly or bi-weekly basis. While we don’t publicly disclose the specific third-party GeoIP database provider we use, we rely on a reputable industry-standard provider to ensure the highest possible accuracy for our customers. We understand that accurate GeoIP routing is crucial for latency-sensitive applications. If you encounter significant and persistent inaccuracies, we encourage you to report them to our support team so we can investigate further.

I also asked whether Scaleway GeoIP uses only the resolver address for positioning, or whether it also evaluates the EDNS ECS field. This would improve accuracy:

Our product team has got back to us to indicate that you are right. First we use the EDNS/ECS feature and if it’s not available we use resolver’s IP address.

What’s next?

An Anycast-based CDN could certainly achieve significantly better results, but for the reasons mentioned above, that’s not a viable solution for me at the moment. Since my small GeoIP CDN achieves an improvement in most, but not all, cases, I’ll continue the experiment. Perhaps there are still some adjustments I can make to improve localization.

Otherwise, I’m also toying with the idea of switching to a more professional, third-party hosted Anycast CDN. However, Cloudflare would then be the only provider I could rely on, since I’m not willing to give up the “100% Green Energy” label of my services for a CDN.

Overall, I can say that my GeoIP CDN helps improve access for most remote users. However, there are limitations:

- The appropriate CDN server is not assigned in all cases (inaccuracy of the GeoIP database).

- To achieve a latency advantage, a given piece of content must already have been accessed by another user in the region (content is not (yet?) kept in sync across all CDN servers, as that would require more storage).

- Only media content is hosted by the CDN. API services and the web frontend are still hosted only in the EU.

Further Improvements: DNS Optimization

After rolling out my CDN, I discovered through performance tools that DNS name resolution, especially for remote users, accounts for a large portion of the overall load time. I described how to improve this in this follow-up article: “Speeding up global DNS resolution by eliminating CNAMES”